Capabilities of AI Transformers#

Background#

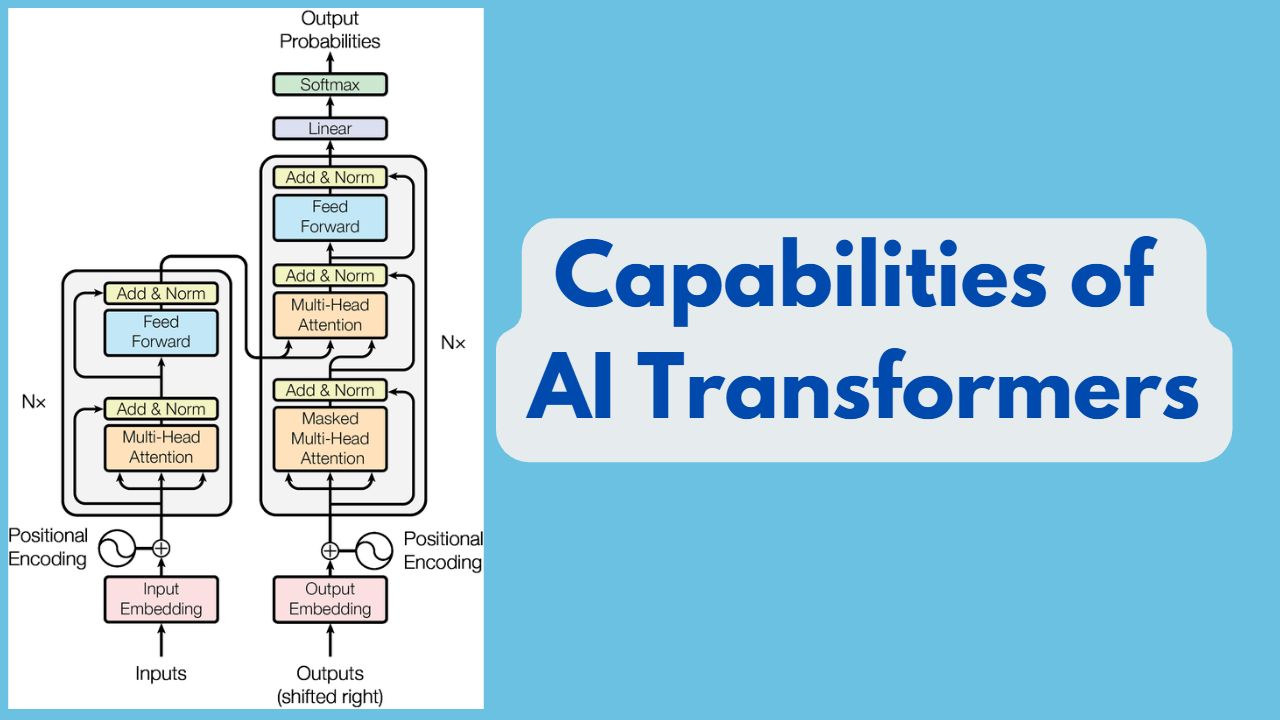

Whether GPT, ChatGPT, DALL-E, Whisper, Satablity AI or whatever significant you see in the AI worlds nowdays it is because of Transformer Architecture. Transformers are a type of neural network architecture that have several properties that make them effective for modeling data with long-range dependencies. They generally feature a combination of multi-headed attention mechanisms, residual connections, layer normalization, feedforward connections, and positional embeddings.

Precursors of Transformers were RNN, LSTM, and GRU architecture. Transformers are based on the 2017 research paper “Attention is All You Need”

Initially, Transformers were used for NLP-related tasks. Slowly researchers started exploring the power of the Transformer Architectures and as of 2023 these are used for hundreds of tasks in different AI domains of technologies like:

- Text Models (NLP, NLU, NLG)

- Vision Models (Computer Vision)

- Audio Models (Audio Processing, Classification, Audio Generation)

- Reinforcement (RL) Models

- Time-series Models

- Multimodal: OCR (extract information from scanned documents), video classification, visual QA, table data question answering

- Graph Models

Starting the journey in 2017, as of now (2023) we have approx 200 Transformer based architectures proposed by various researchers for various purposes. Using these architecture and various benchmark datasets thousands of models have been created which give SOTA performance on various tasks. Based on your need you choose which architecture can help you meet your project objective. There are high chances you will get some pre-trained models which you can use without training (Zero-shot) or small finetuning (one-shot or few-shot) efforts. For that you need to explore Huggingface and PaperWithCode

This articles list all the major Transformer related researcher paper, their object, and capabilities.

Note : Name starting with * are not Transformers, most of them are pretransformer age architectures.

Capabilities of AI Transformers#

| Sno | Transformer | Objective | Summary | NLP Tasks | CV Tasks |

|---|---|---|---|---|---|

| 1 | *AlexNet | Image Classification | A deep convolutional neural network architecture for image classification tasks. | - | Image Classification, Object Detection |

| 2 | *VGG16 | Visual Geometry Group Network (16 layers) | A deep CNN model with 16 convolutional layers developed by the Visual Geometry Group at Oxford University. | - | Image Classification, Object Detection |

| 3 | *VGG19 | Visual Geometry Group Network (19 layers) | A deep CNN model with 19 convolutional layers, an extended version of VGG16. | - | Image Classification, Object Detection |

| 4 | *ResNet | Residual Networks | A deep CNN architecture that introduces residual connections to alleviate the vanishing gradient problem. | - | Image Classification, Object Detection |

| 5 | *InceptionResNet | Combination of Inception and ResNet | A hybrid CNN model that combines the strengths of the Inception and ResNet architectures. | - | Image Classification, Object Detection |

| 6 | *ConvNeXt | Improved Convolutional Neural Network | A convolutional neural network architecture that aims to capture richer spatial relationships in images. | - | Image Classification, Object Detection |

| 7 | *DenseNet | Dense Connections in Convolutional Networks | A densely connected convolutional neural network architecture that encourages feature reuse and reduces the number of parameters. | - | Image Classification, Object Detection |

| 8 | *MobileNetV1 | Mobile-oriented CNN Architecture | A lightweight convolutional neural network architecture designed for mobile and embedded devices. | - | Image Classification, Object Detection |

| 9 | *Xception | Extreme Inception | A deep CNN architecture that replaces the standard Inception modules with depthwise separable convolutions. | - | Image Classification, Object Detection |

| 10 | EncoderDecoder | Sequence-to-sequence modeling | A transformer-based model architecture that combines encoder and decoder for sequence-to-sequence tasks such as machine translation. | Machine Translation, Text Summarization | - |

| 11 | *MobileNetV2 | Improved MobileNet Architecture | An enhanced version of MobileNet with improved performance and efficiency. | - | Image Classification, Object Detection |

| 12 | Data2Vec | Embedding data tables | A transformer-based model for embedding and encoding structured data tables. | Tabular Data Embedding, Data Table Encoding | - |

| 13 | GPT | Language modeling and text generation | A transformer-based model trained on a large corpus to generate coherent and contextually relevant text. | Text Generation, Text Completion, Language Modeling | - |

| 14 | BERT | Pre-training and fine-tuning on various NLP tasks | A transformer-based model widely used for pre-training and fine-tuning on NLP tasks. | Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 15 | MarianMT | Multilingual Neural Machine Translation | A multilingual neural machine translation model based on the Marian framework. | Machine Translation | - |

| 16 | BiT | Vision transformer for image classification | A vision transformer model pre-trained on large-scale datasets for image classification tasks. | - | Image Classification, Object Detection, Semantic Segmentation |

| 17 | Transformer-XL | Transformer model with extended context | A transformer model architecture that extends the context window, enabling longer-range dependencies. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 18 | XLM | Cross-lingual Language Model | A transformer-based model for cross-lingual language understanding and machine translation. | Cross-lingual Language Understanding, Machine Translation | - |

| 19 | CTRL | Text generation with control codes | A transformer-based model that allows fine-grained control over generated text using control codes. | Text Generation, Controlled Text Generation | - |

| 20 | GPT-2 | Language modeling and text generation | A transformer-based model similar to GPT but with a smaller architecture, trained on a large corpus to generate coherent and contextually relevant text. | Text Generation, Text Completion, Language Modeling | - |

| 21 | Funnel Transformer | Improving the efficiency and effectiveness of transformers | A transformer-based model architecture that reduces the computational cost of transformers while maintaining their effectiveness. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 22 | *EfficientNet B0 | Efficient and Scalable CNN | A family of convolutional neural network architectures that achieve high accuracy with fewer parameters and computations. | - | Image Classification, Object Detection |

| 23 | ALBERT | Improve the efficiency of BERT | A lite version of BERT that uses parameter reduction techniques to achieve faster training and lower memory consumption. | Classification, Translation, Named Entity Recognition (NER) | - |

| 24 | EfficientNet | Efficient convolutional neural network architecture | A convolutional neural network architecture that achieves state-of-the-art performance with significantly fewer parameters. | - | Image Classification, Object Detection, Semantic Segmentation |

| 25 | MobileNetV3 | Efficient Mobile Neural Network for Computer Vision | A lightweight and efficient neural network architecture designed for computer vision tasks on mobile devices. | Image Classification, Object Detection, Semantic Segmentation | - |

| 26 | Nezha | Neural Encoder for Zero-shot Transfer Learning | A transformer-based model that enables zero-shot transfer learning by learning a shared semantic space. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 27 | BART | Text generation and summarization | A denoising autoencoder model that can be used for text generation and summarization tasks. | Text Generation, Summarization | - |

| 28 | ERNIE | Enhanced representation through knowledge integration | A transformer-based model that enhances representation learning by integrating external knowledge sources. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 29 | ErnieM | Enhanced representation through multitask learning | A multitask learning framework that enhances representation learning by jointly training multiple downstream NLP tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 30 | FlauBERT | French language representation learning | A transformer-based model specifically trained for French language representation learning tasks. | French Language Processing, Text Classification | - |

| 31 | LXMERT | Vision and Language Multimodal Transformer | A multimodal transformer model that combines vision and language information for various tasks. | Visual Question Answering (VQA), Visual Dialog, Image Captioning, Visual Grounding | - |

| 32 | Pegasus | Pre-training with Extracted Gap Sentences for Abstractive Summarization | A transformer-based model trained for abstractive text summarization tasks. | Text Summarization | - |

| 33 | XLNet | Generalized Autoregressive Pretraining | A transformer-based model that leverages permutation-based training to learn bidirectional context. | Language Modeling, Text Classification | - |

| 34 | BioGpt | Processing biomedical text | A variant of the GPT model specifically designed for processing biomedical text. | Biomedical Text Processing, Named Entity Recognition (NER), Clinical Text Understanding | - |

| 35 | Hubert | Automatic speech recognition with transformers | A transformer-based model designed for automatic speech recognition tasks. | Automatic Speech Recognition | - |

| 36 | REALM | Retrieval-Augmented Language Model | A language model augmented with a dense retrieval mechanism to improve performance on text retrieval tasks. | Information Retrieval, Text Classification, Question Answering (QA) | - |

| 37 | SpeechToTextTransformer | Transformer for Speech-to-Text Conversion | A transformer-based model designed specifically for speech-to-text conversion tasks. | Speech-to-Text Conversion | - |

| 38 | XLM-V | Cross-lingual Language Understanding | A transformer-based model for cross-lingual language understanding, leveraging multilingual embeddings. | Cross-lingual Language Understanding | - |

| 39 | RoBERTa | Robustly optimized BERT variant | An optimized variant of BERT (Bidirectional Encoder Representations from Transformers) for various NLP tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 40 | GPT Neo | Efficient and scalable variant of GPT | A transformer-based model architecture that provides an efficient and scalable variant of GPT for various natural language processing tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 41 | CamemBERT | French language processing and text classification | A transformer-based model specifically trained for French language processing and text classification tasks. | French Language Processing, Text Classification | - |

| 42 | DialoGPT | Conversational AI chatbot | A transformer-based model trained for generating human-like conversational responses. | Conversational AI, Chatbot | - |

| 43 | DistilBERT | Distilled version of BERT | A smaller and faster version of BERT with a similar performance on various NLP tasks. | Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 44 | LiLT | Language learning from transliterated text | A transformer-based model for language learning that utilizes transliterated text as training data. | Language Learning | - |

| 45 | LUKE | Language Understanding with Knowledge-based Entities | A model that integrates knowledge-based entities into transformer-based language understanding tasks. | Named Entity Recognition (NER), Relation Extraction, Knowledge Graph Completion | - |

| 46 | MobileBERT | Efficient BERT for Mobile and Edge Devices | A compact and efficient version of BERT designed for deployment on mobile and edge devices. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 47 | MT5 | Multilingual Text-to-Text Transfer Transformer | A transformer-based model capable of multilingual text-to-text transfer learning across various NLP tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 48 | RAG | Retrieval-Augmented Generation | A model that combines retrieval and generation methods for open-domain question answering. | Open-Domain Question Answering | - |

| 49 | ConvBERT | Text classification and named entity recognition (NER) | A transformer-based model for text classification and named entity recognition (NER) tasks. | Classification, Named Entity Recognition (NER), Sentiment Analysis | - |

| 50 | Megatron-GPT2 | High-performance GPT-2-based language model | A high-performance GPT-2-based language model developed using the Megatron framework. | Text Generation, Text | - |

| 51 | PhoBERT | Pretrained language model for Vietnamese | A pretrained language model specifically designed for the Vietnamese language. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 52 | RoBERTa-PreLayerNorm | RoBERTa with PreLayerNorm | A variant of RoBERTa with the PreLayerNorm (PLN) technique, which improves training stability and efficiency. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 53 | BERTweet | Pre-trained BERT models for processing tweets | BERT models specifically trained on Twitter data for tweet processing tasks. | Classification, Named Entity Recognition (NER), Sentiment Analysis | - |

| 54 | mBART | Multilingual Denoising Autoencoder | A multilingual denoising autoencoder based on the BART framework, capable of generating text in multiple languages. | Text Generation, Text Completion, Multilingual Language Modeling | - |

| 55 | Megatron-BERT | High-performance BERT-based language model | A high-performance BERT-based language model developed using the Megatron framework. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 56 | SpeechToTextTransformer2 | Transformer model for Speech-to-Text Conversion | Another transformer-based model for speech-to-text conversion, providing an alternative approach. | Speech-to-Text Conversion | - |

| 57 | BERT For Sequence Generation | Text generation using BERT-based models | Fine-tuned BERT models for sequence generation tasks, such as text generation or summarization. | Text Generation, Summarization | - |

| 58 | ConvNeXT | Language modeling and text generation | A transformer-based model for language modeling and text generation tasks. | Language Modeling, Text Generation | - |

| 59 | ELECTRA | Pre-training method for language representation learning | A pre-training method that replaces masked language modeling with a generator-discriminator setup for better language representation. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 60 | Longformer | Long-range sequence modeling with transformers | A transformer-based model architecture that extends the standard transformer to handle long-range dependencies. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 61 | RegNet | Regularized Convolutional Neural Network | A convolutional neural network architecture with regularization techniques for efficient and scalable training. | - | Image Classification, Object Detection, Semantic Segmentation |

| 62 | SqueezeBERT | Lightweight BERT model with Squeeze-and-Excitation | A lightweight variant of BERT with Squeeze-and-Excitation (SE) blocks for efficient training and inference. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 63 | LayoutLM | Text and layout understanding for document analysis | A transformer-based model that combines text and layout information for document understanding tasks. | Document Understanding, OCR, Named Entity Recognition (NER) | - |

| 64 | MPNet | Megatron Pretrained Network | A model pretrained using the Megatron framework, designed for various NLP tasks with high performance. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 65 | VisualBERT | Integrating Visual Information with BERT | A BERT-based model that incorporates visual information for multimodal understanding. | - | Vision-Language Tasks, Image Captioning, Visual Question Answering (VQA) |

| 66 | Conditional DETR | Object detection and instance segmentation | A transformer-based model for object detection and instance segmentation tasks. | - | Object Detection, Instance Segmentation |

| 67 | GPTBigCode | Code generation for programming languages | A transformer-based model trained on a large corpus of code to generate code snippets or complete programs for various programming languages. | Code Generation, Programming Language Processing | - |

| 68 | M-CTC-T | Music Transcription with Transformer | A transformer-based model designed for music transcription, converting audio into musical notation. | Music Transcription | - |

| 69 | Pix2Struct | Image-to-Structure Translation | A transformer-based model for translating images into structured representations. | - | Image-to-Structure Translation |

| 70 | ProphetNet | Pretrained Sequence-to-Sequence Model | A sequence-to-sequence model pretrained for various NLP tasks, based on the transformer architecture. | Text Generation, Text Completion, Machine Translation, Summarization | - |

| 71 | SEW | Simple and Efficient Word-level language model | A word-level language model that is simple and efficient, designed for various NLP tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 72 | T5 | Text-to-Text Transfer Transformer | A text-to-text transfer transformer model that can be fine-tuned for various NLP tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 73 | DeBERTa | Improving the effectiveness of BERT | A transformer-based model that enhances BERT by addressing its limitations and improving performance on various NLP tasks. | Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 74 | Informer | Time series forecasting with transformers | A transformer-based model for time series forecasting tasks, capturing long-term dependencies in the data. | Time Series Forecasting | - |

| 75 | LED | Language model for efficient decoding | A transformer-based language model designed for efficient decoding, suitable for constrained environments. | Text Generation, Text Completion, Language Modeling | - |

| 76 | SwitchTransformers | Transformers with Dynamic Routing | A library that provides implementations of various transformer models with dynamic routing capabilities. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | Image Classification, Object Detection, Semantic Segmentation |

| 77 | Whisper | Unsupervised Representation Learning | A transformer-based model for unsupervised representation learning on audio data. | Speech Representation Learning | - |

| 78 | XLM-ProphetNet | Cross-lingual Language Generation | A transformer-based model for cross-lingual language generation, extending the ProphetNet architecture. | Cross-lingual Language Generation | - |

| 79 | XLM-RoBERTa | Cross-lingual Language Representation | A cross-lingual variant of RoBERTa, providing multilingual representation learning. | Cross-lingual Language Representation | - |

| 80 | Deformable DETR | Object detection and instance segmentation with deformable attention | A transformer-based model for object detection and instance segmentation tasks, incorporating deformable attention mechanisms. | - | Object Detection, Instance Segmentation |

| 81 | FNet | Image generation with Fourier features | A transformer-based model that generates images using Fourier features instead of traditional positional encodings. | - | Image Generation |

| 82 | GPTSAN-japanese | Japanese language variant of GPT for sentiment analysis | A version of GPT specifically designed and trained for sentiment analysis tasks in the Japanese language. | Japanese Language | |

| 83 | SEW-D | Deep version of Simple and Efficient Word-level language model | A deep variant of SEW for improved performance on NLP tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 84 | CPM | Chinese language processing and text generation | A transformer-based model specifically designed for Chinese language processing and text generation tasks. | Chinese Language Processing, Text Generation | - |

| 85 | GIT | Generating informative text from structured data | A transformer-based model that generates informative text, such as explanations or summaries, from structured data inputs. | Data-to-Text Generation, Structured Data Processing | - |

| 86 | LayoutXLM | Multilingual document understanding with transformers | A transformer-based model for multilingual document understanding, incorporating text and layout information. | Multilingual Document Understanding, OCR, Named Entity Recognition (NER) | - |

| 87 | DETR | Object detection and instance segmentation | A transformer-based model for object detection and instance segmentation tasks. | - | Object Detection, Instance Segmentation |

| 88 | GPT NeoX | Further improved version of GPT Neo | An advanced version of GPT Neo that incorporates additional enhancements and optimizations for natural language processing tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 89 | RemBERT | Transformer model for code | A transformer-based model specifically designed for code-related tasks, such as code generation and understanding. | Code Generation, Code Understanding | - |

| 90 | RoCBert | Robustly optimized Chinese BERT variant | A Chinese language variant of RoBERTa, optimized for various NLP tasks in Chinese text. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 91 | TAPAS | Table Parsing via Transformer | A transformer-based model designed for table parsing, enabling natural language queries over tabular data. | Table Parsing, Question Answering (QA) over Tabular Data | - |

| 92 | UPerNet | Unified Perceptual Parsing Network | A unified perceptual parsing network based on the transformer model, designed for image segmentation tasks. | Semantic Segmentation, Image Parsing | - |

| 93 | Vision Transformer (ViT) | Transformer-based model for image classification | A transformer-based model designed for image classification tasks, replacing convolutional layers with self-attention. | - | Image Classification, Object Detection, Semantic Segmentation |

| 94 | Wav2Vec2 | Self-supervised Audio Representation Learning | A transformer-based model for self-supervised audio representation learning, capturing phonetic information. | Speech Recognition, Speech Representation Learning | - |

| 95 | PLBart | Pre-trained Language model for BART | A pre-trained variant of BART (Bidirectional and AutoRegressive Transformers) for various NLP tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 96 | DiT | Vision transformer for image classification | A transformer-based model for image classification tasks that applies vision transformers to process image data. | - | Image Classification, Object Detection, Semantic Segmentation |

| 97 | DPR | Dense Passage Retrieval | A transformer-based model for dense passage retrieval, enabling efficient and accurate retrieval of relevant passages. | Passage Retrieval, Document Ranking | - |

| 98 | GLPN | Learning global-local patterns in natural language processing | A transformer-based model that captures both global and local patterns in text for various natural language processing tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 99 | LeViT | Vision transformer with less computations | A vision transformer model that reduces computational requirements by using fewer computations. | - | Image Classification, Object Detection, Semantic Segmentation |

| 100 | NAT | Neural Architecture Transformer | A transformer-based model that learns to design neural architectures for various tasks. | Neural Architecture Search, AutoML | - |

| 101 | TAPEX | Transformer model for text and program execution | A transformer-based model capable of executing programs described in natural language text. | Text-to-Program Execution, Natural Language Processing | - |

| 102 | VideoMAE | Video Motion Analysis Encoder | A transformer-based model for video motion analysis tasks, encoding motion information in videos. | - | Video Motion Analysis, Action Recognition, Video Understanding |

| 103 | Wav2Vec2-Conformer | Conformer-based variant of Wav2Vec2 | A variant of Wav2Vec2 that incorporates Conformer architecture, improving its performance on speech-related tasks. | Speech Recognition, Speech Representation Learning | - |

| 104 | CLIP | Image-text matching and zero-shot learning | A transformer-based model that learns to match images and text, enabling zero-shot learning capabilities. | - | Image-Text Matching, Zero-Shot Learning |

| 105 | XLS-R | Cross-lingual Speech Recognition | A transformer-based model for cross-lingual speech recognition, trained on multilingual speech data. | Cross-lingual Speech Recognition | - |

| 106 | Audio Spectrogram Transformer | Processing audio spectrograms | A transformer model specifically designed for processing audio spectrograms. | Automatic Speech Recognition (ASR), Sound Classification | - |

| 107 | M2M100 | Multilingual Multimodal Transformer | A transformer-based model capable of multilingual and multimodal tasks, trained on 100 different languages. | Machine Translation, Multilingual Text Classification, Multimodal Tasks | - |

| 108 | MEGA | Multilingual Language Generation with Transformers | A transformer-based model for multilingual language generation tasks, capable of producing text in multiple languages. | Text Generation, Text Completion, Multilingual Language Modeling | - |

| 109 | BEiT | Vision transformer for image classification | Combines concepts from CNNs and transformers for image classification tasks. | - | Image Classification, Object Detection, Semantic Segmentation |

| 110 | BigBird-Pegasus | Text generation and summarization | A variant of the Pegasus model that incorporates the BigBird sparse attention mechanism. | Text Generation, Summarization | - |

| 111 | BigBird-RoBERTa | Classification and named entity recognition | A variant of the RoBERTa model that incorporates the BigBird sparse attention mechanism. | Classification, Named Entity Recognition (NER) | - |

| 112 | CLIPSeg | Image segmentation | A transformer-based model for image segmentation tasks. | - | Image Segmentation |

| 113 | DPT | Object detection and instance segmentation with deformable attention | A transformer-based model for object detection and instance segmentation tasks, incorporating deformable attention mechanisms. | - | Object Detection, Instance Segmentation |

| 114 | Perceiver IO | Perceiver with Input/output processing | A transformer model architecture that handles input and output processing jointly, enabling cross-modal tasks. | Multimodal Tasks | - |

| 115 | Reformer | Memory-efficient Transformer | A transformer model variant designed to be more memory-efficient by using reversible layers. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 116 | RoFormer | Robustly optimized Transformer variant for images | A transformer-based model specifically designed for image-related tasks, leveraging self-attention mechanisms. | - | Image Classification, Object Detection, Semantic Segmentation |

| 117 | Swin Transformer | Shifted Window Transformer | A transformer model that uses shifted windows to capture long-range dependencies in images. | - | Image Classification, Object Detection |

| 118 | TrOCR | Transformer-based OCR model | A transformer-based model designed for Optical Character Recognition (OCR) tasks, converting images to text. | Optical Character Recognition (OCR) | - |

| 119 | Wav2Vec2Phoneme | Phoneme-level variants of Wav2Vec2 | Phoneme-level variants of Wav2Vec2 designed for speech recognition tasks at the phoneme level. | Phoneme-level Speech Recognition | - |

| 120 | X-CLIP | Cross-modal Learning with CLIP | A transformer-based model for cross-modal learning, incorporating the CLIP framework. | - | Vision-Language Tasks, Cross-modal Learning |

| 121 | XLSR-Wav2Vec2 | Cross-lingual Speech Representation | A variant of Wav2Vec2 trained for cross-lingual speech representation learning. | Cross-lingual Speech Representation | - |

| 122 | Blenderbot | Conversational AI chatbot | A chatbot model designed for multi-turn conversations that combines language and dialogue understanding. | - | - |

| 123 | BlenderbotSmall | Conversational AI chatbot | A smaller version of Blenderbot, designed for multi-turn conversations with language and dialogue understanding capabilities. | - | - |

| 124 | BLIP | Image classification and image captioning | A transformer-based model for image classification and image captioning tasks. | - | Image Classification, Image Captioning |

| 125 | ByT5 | Text translation, classification, and question answering | A transformer-based model trained on T5 architecture, suitable for text translation, classification, and question answering tasks. | Translation, Text Classification, Question Answering (QA) | - |

| 126 | CvT | Cross Vision and Transformer | A transformer-based model that combines vision and language understanding, enabling cross-modal tasks in computer vision. | - | Image-Text Matching, Vision-Language Tasks |

| 127 | DeBERTa-v2 | Improved version of DeBERTa | An updated version of DeBERTa with improved performance and compatibility for various NLP tasks. | Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 128 | DeiT | Vision transformer for image classification | A vision transformer model designed for image classification tasks. | - | Image Classification, Object Detection, Semantic Segmentation |

| 129 | GroupViT | Vision transformer with group-based operations | A vision transformer model that incorporates group-based operations to enhance its representation capacity. | - | Image Classification, Object Detection, Semantic Segmentation |

| 130 | LayoutLMv2 | Improved version of LayoutLM for document analysis | An enhanced version of LayoutLM with improved performance and additional capabilities for document analysis. | Document Understanding, OCR, Named Entity Recognition (NER) | - |

| 131 | MaskFormer | Masked Language Modeling with Transformers | A transformer-based model architecture for masked language modeling tasks, such as pretraining BERT. | Language Modeling, Pretraining BERT | - |

| 132 | SegFormer | Segmentation Transformer for computer vision | A transformer-based model designed for image segmentation tasks in computer vision. | Semantic Segmentation, Object Detection | - |

| 133 | Time Series Transformer | Transformer model for time series data | A transformer-based model designed specifically for time series data analysis and forecasting tasks. | Time Series Forecasting, Anomaly Detection, Sequence Modeling | - |

| 134 | TimeSformer | Time Series Transformer for video analysis | A transformer-based model for video analysis and action recognition tasks, leveraging temporal information. | - | Video Action Recognition, Temporal Modeling |

| 135 | Trajectory Transformer | Transformer model for trajectory forecasting | A transformer-based model designed for trajectory forecasting tasks, such as predicting object movement. | Trajectory Forecasting, Object Movement Prediction | - |

| 136 | UniSpeech | Unified Speech Recognition and Synthesis Transformer | A unified transformer-based model for both speech recognition and speech synthesis tasks. | Speech Recognition, Text-to-Speech Synthesis | - |

| 137 | UniSpeechSat | Self-supervised pre-training for UniSpeech | A self-supervised pre-training method for UniSpeech, improving its performance on speech-related tasks. | Speech Recognition, Text-to-Speech Synthesis | - |

| 138 | ALIGN | Joint representation learning for textual and tabular data | Enables joint representation learning by aligning textual and tabular data. | Text-Tabular Alignment, Joint Representation Learning | - |

| 139 | BORT | Language modeling and reinforcement learning | A transformer-based model for language modeling and reinforcement learning tasks. | Language Modeling, Text Generation | - |

| 140 | DePlot | Data visualization | A transformer-based model that generates interactive and informative visualizations from data. | Data Visualization | - |

| 141 | DETA | Document extraction and text analysis | A transformer-based model for document extraction, information retrieval, and text analysis tasks. | Document Extraction, Information Retrieval, Text Analysis | - |

| 142 | DiNAT | Network traffic anomaly detection | A transformer-based model for network traffic anomaly detection, specifically designed for cybersecurity applications. | Network Traffic Analysis, Anomaly Detection | - |

| 143 | Jukebox | Music generation with transformers | A transformer-based model architecture for generating music with various styles and genres. | Music Generation | - |

| 144 | mBART-50 | Compact version of mBART for resource-constrained | A compact version of mBART with reduced parameters and computational requirements. | Text Generation, Text Completion, Multilingual Language Modeling | - |

| 145 | Nyströmformer | Approximating Full Transformers with Nyström | A transformer variant that approximates full self-attention using the Nyström method for efficiency. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 146 | ViT Hybrid | Hybrid Architecture of Vision Transformer | A hybrid architecture that combines vision transformer with convolutional neural networks for image understanding. | - | Image Classification, Object Detection, Semantic Segmentation |

| 147 | X-MOD | Cross-modal Language Modeling | A transformer-based model for cross-modal language modeling, integrating vision and text. | - | Vision-Language Tasks, Cross-modal Language Modeling |

| 148 | BARTpho | Text-to-speech synthesis | A variant of BART model for text-to-speech synthesis tasks. | Text-to-Speech Synthesis | - |

| 149 | BridgeTower | Language modeling and text generation | A transformer-based model for language modeling and text generation tasks. | Text Generation, Language Modeling | - |

| 150 | CodeGen | Code generation | A transformer-based model for generating code. | Code Generation | - |

| 151 | GPT-J | Japanese language variant of GPT-2 | A version of GPT-2 specifically designed and trained for Japanese language understanding and generation tasks. | Japanese Language Processing, Text Generation | - |

| 152 | LLaMA | Label-agnostic learning with transformers | A transformer-based model that learns to perform tasks without explicit labels, leveraging self-supervision. | Self-Supervised Learning, Representation Learning, Clustering | - |

| 153 | MarkupLM | Transformer for document structure understanding | A transformer-based model for understanding document structure and semantic relationships in text. | Document Structure Understanding, Semantic Analysis | - |

| 154 | PoolFormer | Pooling-based Vision Transformer | A vision transformer model that incorporates pooling operations for handling images of varying sizes. | - | Image Classification, Object Detection, Semantic Segmentation |

| 155 | QDQBert | Query-Doc Bidirectional Transformer | A transformer model specifically designed for query-document ranking and retrieval tasks. | Information Retrieval, Question Answering, Document Ranking | - |

| 156 | ViLT | Vision-and-Language Transformer | A transformer-based model that combines vision and language understanding for multimodal tasks. | - | Vision-Language Tasks, Image Captioning, Visual Question Answering (VQA) |

| 157 | BARThez | Text generation and summarization | A variant of BART model trained specifically for the French language. | Text Generation, Summarization | - |

| 158 | Donut | Anomaly detection in time series data | A transformer-based model for detecting anomalies in time series data, suitable for various applications such as monitoring systems. | - | Anomaly Detection, Time Series Analysis |

| 159 | ImageGPT | Image generation with transformers | A transformer-based model architecture for generating images based on text prompts. | - | Image Generation |

| 160 | OPT | Optimization Pretraining Transformer | A transformer model pre-trained for optimization tasks, such as combinatorial optimization and planning. | Combinatorial Optimization, Planning | - |

| 161 | Splinter | Speech and Language Integrated Transformer | A transformer-based model designed for integrating speech and language tasks. | Speech-to-Text Conversion, Speech Recognition, Natural Language Processing | - |

| 162 | XGLM | Cross-lingual Language Modeling | A transformer-based model for cross-lingual language modeling, learning representations across languages. | Cross-lingual Language Modeling | - |

| 163 | YOSO | You Only Speak Once | A transformer-based model for low-resource machine translation, using only monolingual data. | Low-resource Machine Translation | - |

| 164 | EfficientFormer | Efficient transformer architecture for sequence modeling | A transformer-based model architecture designed to improve efficiency and performance for sequence modeling tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Machine Translation | - |

| 165 | ESM | Protein structure prediction | A transformer-based model for predicting the 3D structure of proteins from their amino acid sequences. | Protein Structure Prediction, Bioinformatics | - |

| 166 | Mask2Former | Transformer-based masked image inpainting | A transformer-based model for masked image inpainting, reconstructing missing parts of an image. | - | Image Inpainting |

| 167 | MGP-STR | Music Generation with Pre-trained Model | A pre-trained model for generating music, leveraging a transformer-based architecture. | Music Generation | - |

| 168 | NLLB | Natural Language Logic Board | A model that combines natural language understanding and symbolic logic reasoning for language understanding. | Natural Language Understanding, Logic Reasoning | - |

| 169 | T5v1.1 | Version 1.1 of the Text-to-Text Transfer Transformer | An updated version of the T5 model with improvements and enhancements for better performance. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 170 | TVLT | Tiny Vision-Language Transformer | A compact vision-language transformer model designed for efficient processing of vision and language inputs. | - | Vision-Language Tasks, Image Captioning, Visual Question Answering (VQA) |

| 171 | WavLM | Language Modeling for Speech | A transformer-based model for language modeling on speech data. | Speech Language Modeling | - |

| 172 | XLM-RoBERTa-XL | Cross-lingual Language Representation | A larger variant of XLM-RoBERTa for cross-lingual language representation learning. | Cross-lingual Language Representation | - |

| 173 | Chinese-CLIP | Chinese language processing and image-text matching | A transformer-based model designed for Chinese language processing and image-text matching tasks. | Chinese Language Processing, Image-Text Matching | - |

| 174 | CLAP | Image-text representation learning | A transformer-based model for learning joint image-text representations. | - | Image-Text Representation Learning |

| 175 | Decision Transformer | Decision-making tasks | A transformer-based model designed for decision-making tasks that require complex reasoning and inference. | Decision-Making, Reasoning, Inference | - |

| 176 | BLIP-2 | Image classification | An updated version of BLIP, specializing in image classification tasks. | - | Image Classification |

| 177 | CANINE | Document classification | A transformer-based model for document classification tasks. | Document Classification | - |

| 178 | Graphormer | Graph representation learning with transformers | A transformer-based model architecture specifically designed for graph representation learning. | Graph Representation Learning, Node Classification, Graph Classification, Graph Generation | - |

| 179 | I-BERT | Incremental learning with transformers | A transformer-based model architecture that supports incremental learning, allowing continual model updates. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 180 | MatCha | Matching Challenge Transformer | A transformer-based model for solving matching challenge tasks, such as natural language inference. | Natural Language Inference, Textual Entailment | - |

| 181 | mLUKE | Multilingual Language Understanding with Knowledge | A multilingual model that incorporates knowledge-based entities for language understanding tasks. | Named Entity Recognition (NER), Relation Extraction, Knowledge Graph Completion | - |

| 182 | MobileViT | Vision Transformer for Mobile and Edge Devices | A mobile-friendly version of Vision Transformer, optimized for efficient deployment on mobile and edge devices. | - | Image Classification, Object Detection, Semantic Segmentation |

| 183 | OWL-ViT | Object-Wide Learning Vision Transformer | A vision transformer model designed for object detection and recognition tasks in computer vision. | Object Detection, Object Recognition | - |

| 184 | SpeechT5 | T5-based model for Speech-to-Text | A transformer-based model trained for speech-to-text conversion tasks using the T5 architecture. | Speech-to-Text Conversion | - |

| 185 | Swin Transformer V2 | Advanced version of Swin Transformer | An advanced version of the Swin Transformer model, incorporating improvements for better performance in vision tasks. | - | Image Classification, Object Detection, Semantic Segmentation |

| 186 | ViTMAE | Vision Transformer for Multi-label Image Classification | A vision transformer model designed specifically for multi-label image classification tasks. | - | Multi-label Image Classification |

| 187 | BLOOM | Language modeling and text generation | A transformer-based model designed for language modeling and text generation tasks. | Text Generation, Language Modeling | - |

| 188 | ConvNeXTV2 | Language modeling and text generation | An improved version of ConvNeXT for language modeling and text generation tasks. | Language Modeling, Text Generation | - |

| 189 | CPM-Ant | Chinese language processing and text generation | An enhanced version of CPM with better performance and compatibility for Chinese language processing and text generation tasks. | Chinese Language Processing, Text Generation | - |

| 190 | GPT-Sw3 | Swedish language variant of GPT | A version of GPT specifically designed and trained for Swedish language understanding and generation tasks. | Swedish Language Processing, Text Generation | - |

| 191 | LongT5 | Text-to-Text Transfer Transformer | A transformer-based model for text-to-text transfer learning, capable of performing various NLP tasks. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 192 | OneFormer | Transformer for Text-to-Text Transfer Learning | A transformer-based model designed for text-to-text transfer learning tasks across multiple languages. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Question Answering (QA) | - |

| 193 | Table Transformer | Transformer model for table-related tasks | A transformer-based model specifically designed for table-related tasks, such as table understanding and extraction. | Table Understanding, Table Extraction | - |

| 194 | VAN | Vision-Adaptive Transformer for Video Analysis | A transformer model designed specifically for video analysis tasks, adapting to the dynamic visual context. | - | Video Classification, Action Recognition, Video Understanding |

| 195 | AltCLIP | Predicting the relationship between two images | A transformer-based model that learns to predict the relationship between two images. | - | Image-Text Matching, Vision-Language Tasks |

| 196 | MVP | Multimodal Variational Pretraining | A multimodal pretraining framework that combines text and image modalities for various downstream tasks. | Multimodal Tasks | - |

| 197 | NLLB-MOE | Natural Language Logic Board with MOE | An enhanced version of NLLB that incorporates Mixture of Experts (MOE) for improved performance. | Natural Language Understanding, Logic Reasoning | - |

| 198 | PEGASUS-X | Large-Scale Pre-training for Abstractive Summarization | A variant of Pegasus with larger model capacity, trained on a large-scale corpus for abstractive summarization. | Text Summarization | - |

| 199 | Swin2SR | Swin Transformer for Super-Resolution | A variant of the Swin Transformer model specifically designed for super-resolution tasks in computer vision. | - | Super-Resolution Image Reconstruction |

| 200 | UL2 | Unsupervised Language Learning | A transformer-based model designed for unsupervised language learning tasks, leveraging self-supervised learning techniques. | Language Modeling, Text Representation Learning | - |

| 201 | ViTMSN | Vision Transformer with Masked Spatial Neurons | A vision transformer model with masked spatial neurons, enabling better spatial representation learning. | - | Image Classification, Object Detection, Semantic Segmentation |

| 202 | YOLOS | You Only Learn One Sentence | A transformer-based model that learns sentence representations for zero-shot classification. | Zero-shot Text Classification | - |

| 203 | FLAN-T5 | Fast and lightweight adapter-based transformers for T5 | A transformer-based model architecture that enables efficient and lightweight adaptation of T5 models. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Machine Translation | - |

| 204 | GPT NeoX Japanese | Japanese language variant of GPT NeoX | A version of GPT NeoX specifically designed and trained for Japanese language understanding and generation tasks. | Japanese Language Processing, Text Generation | - |

| 205 | LayoutLMv3 | Further improved version of LayoutLM for documents | An advanced version of LayoutLM that incorporates additional enhancements and optimizations. | Document Understanding, OCR, Named Entity Recognition (NER) | - |

| 206 | FLAN-UL2 | Fast and lightweight adapter-based transformers for UL2 | A transformer-based model architecture that enables efficient and lightweight adaptation of UL2 models. | Text Classification, Named Entity Recognition (NER), Sentiment Analysis, Machine Translation | - |

| 207 | FLAVA | Fluency and acceptability evaluation for machine translation | A transformer-based model that evaluates the fluency and acceptability of machine translations. | Machine Translation Evaluation | - |

Conclusion#

The purpose of this article is to give you a general understanding of the capabilities of the Transformer architecture. It is now up to you to decide which architecture is most suitable to your needs based on the task you have in front of you. Afterwards, you can use hugginface or tfhub to see if there are already models that have been trained using these architectures. The chances are that you will be able to complete your work using zero-shot transfer learning are high.

Comments: