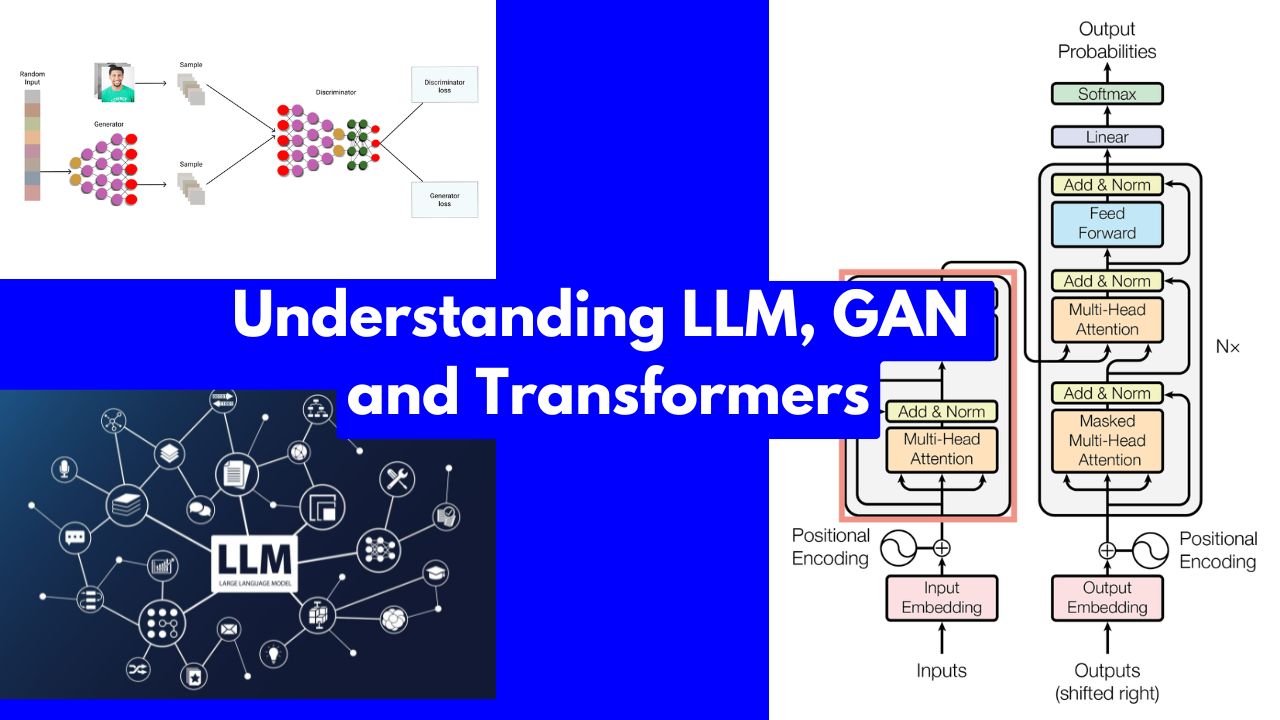

Understanding LLM, GAN and Transformers#

LLM Layers#

Large Language Models (LLMs) are typically based on Transformer architectures, which consist of several types of layers that work together to process and generate text. Here are the primary kinds of layers found in an LLM:

Embedding Layers:

- Token Embedding Layer: Converts input tokens (words, subwords, or characters) into dense n dimensional vectors.

- Position Embedding Layer: Adds positional information to the token embeddings, allowing the model to understand the order of tokens.

Transformer Encoder Layers: This layer is found in models, which are designed for generating encoded represenration of the input.

- Self-Attention Layer: Computes attention scores to capture dependencies between tokens in the input sequence. It allows the model to weigh the importance of different tokens.

- Feedforward Layer: Applies a fully connected neural network to each token independently, often consisting of two linear transformations with a non-linear activation function (e.g., ReLU) in between.

- Layer Normalization: Normalizes the inputs to each sub-layer, improving training stability.

- Residual Connections: Adds the input of each sub-layer to its output, facilitating gradient flow and improving convergence.

Transformer Decoder Layers: This layer is found in models, which are designed for sequence-to-sequence tasks like translation.

- Masked Self-Attention Layer: Similar to the self-attention layer in the encoder but with masking to prevent attending to future tokens, ensuring autoregressive generation.

- Encoder-Decoder Attention Layer: Computes attention scores between the decoder tokens and the encoder’s output tokens, allowing the decoder to focus on relevant parts of the input sequence.

- Feedforward Layer, Layer Normalization, and Residual Connections: As in the encoder layers.

Output Layers:

- Linear Layer: Projects the final hidden states to the vocabulary size, producing logits for each token in the vocabulary.

- Softmax Layer: Converts the logits to probabilities, providing a probability distribution over the vocabulary for the next token prediction.

Specialized Layers (in some advanced LLM architectures):

- Attention Mechanisms Variants: Variants like multi-head attention, relative position encodings, and cross-attention mechanisms.

- Memory Layers: Augment the model with external memory to store and retrieve long-term dependencies.

- Sparse or Adaptive Attention: Optimize attention mechanisms for efficiency, especially in handling long sequences.

- Adapter Layers: Lightweight layers added to pre-trained models to allow efficient fine-tuning on new tasks without modifying the original model weights significantly.

Each of these layers plays a crucial role in enabling LLMs to process, understand, and generate natural language effectively. The combination of these layers, particularly the self-attention mechanism and the feedforward neural networks, is what makes the Transformer architecture so powerful for a wide range of NLP tasks.

Some Powerful LLM and their developers#

Here is a list of some recently developed large language models (LLMs) along with their developer organizations:

- GPT-4 - OpenAI

- LLaMA (Large Language Model Meta AI) - Meta (formerly Facebook)

- Claude - Anthropic

- PaLM (Pathways Language Model) - Google

- Grok - xAI (Elon Musk’s AI company)

- Mistral - Mistral AI

- Falcon - Technology Innovation Institute (TII) in Abu Dhabi

- Gemini - Google DeepMind

- Cohere Command R - Cohere

- Grok - xAI

- Megatron-Turing NLG - NVIDIA and Microsoft

- LaMDA - Google

- BLOOM - BigScience (collaborative effort)

- ERNIE - Baidu

- WuDao - Beijing Academy of Artificial Intelligence (BAAI)

- Co:here - Cohere

- T5 (Text-to-Text Transfer Transformer) - Google

- XLNet - Google and Carnegie Mellon University

- Gopher - DeepMind

- Jurassic-2 - AI21 Labs

- OPT - Meta (Facebook)

Architectures used by these LLMs#

Decoder-Only Architectures:#

- GPT-4 - OpenAI

- Claude - Anthropic

- Jurassic-2 - AI21 Labs

- OPT - Meta (Facebook)

- LLaMA - Meta (Facebook)

- Megatron-Turing NLG - NVIDIA and Microsoft

Encoder-Decoder Architectures:#

- PaLM - Google

- T5 (Text-to-Text Transfer Transformer) - Google

- Gemini - Google DeepMind

- BLOOM - BigScience (collaborative effort)

Encoder-Only Architectures:#

- LaMDA - Google

- Gopher - DeepMind

- Mistral - Mistral AI

- Falcon - Technology Innovation Institute (TII)

- ERNIE - Baidu

- WuDao - Beijing Academy of Artificial Intelligence (BAAI)

- Co:here - Cohere

- XLNet - Google and Carnegie Mellon University (Note: XLNet is a permutation-based Transformer, which can be seen as a generalization of BERT with an autoregressive component)

Different architectures are chosen based on the specific requirements and goals of the model, such as whether it is primarily for text generation (decoder-only) or understanding tasks (encoder-only), or for tasks that benefit from both (encoder-decoder).

What are different GAN architectures used in language modeling?#

GAN-based approaches for language modeling are less common compared to Transformer-based architectures due to the challenges discussed later in this artcile. However, there have been some attempts to use GANs for text generation and language modeling. Here are a few notable GAN-based models and approaches in the realm of language processing:

SeqGAN:

- Developed by: University of Illinois at Urbana-Champaign, Microsoft Research, and University of Edinburgh.

- Description: SeqGAN uses GANs for sequence generation tasks. It treats text generation as a sequential decision-making process and uses a discriminator to evaluate the quality of entire sequences.

TextGAN:

- Developed by: University of Chinese Academy of Sciences and Peking University.

- Description: TextGAN is designed for generating realistic text by incorporating a maximum-likelihood objective into the GAN framework. The model uses a discriminator to differentiate between real and generated text and optimizes the generator to produce high-quality text.

LeakGAN:

- Developed by: Peking University and Microsoft Research.

- Description: LeakGAN introduces a mechanism to “leak” the discriminator’s information to the generator during training. This helps the generator to produce more realistic and coherent text sequences by leveraging intermediate feedback from the discriminator.

RelGAN:

- Developed by: University of Chinese Academy of Sciences.

- Description: RelGAN focuses on improving the training stability and quality of text generation by using relational memory for the generator and a step-wise evaluation for the discriminator.

MaliGAN:

- Developed by: Carnegie Mellon University and Microsoft Research.

- Description: MaliGAN aims to address the mode collapse problem in GANs by introducing a new reward function for the generator that mitigates the issues of traditional GAN training in the context of text generation.

ColdGANs:

- Developed by: Facebook AI Research.

- Description: ColdGANs use a temperature-based sampling technique to improve the quality and diversity of generated text by stabilizing the training process and reducing mode collapse.

While these models represent notable attempts to apply GANs to language modeling and text generation, they have not achieved the same level of widespread adoption or success as Transformer-based architectures like GPT or BERT. The challenges associated with training GANs for discrete text data and the remarkable performance of Transformer models have led to the latter being the preferred choice for most large-scale language modeling tasks.

Why researchers are not using GAN for developing LLM?#

Generative Adversarial Networks (GANs) have been incredibly successful in generating realistic images and other types of data, but they are not typically used for large language models (LLMs) due to several challenges and limitations. Here are some key reasons why GANs are not commonly used for LLMs:

Mode Collapse:

- GANs often suffer from mode collapse, where the generator produces a limited variety of outputs. This is particularly problematic for language generation, where diversity and fluency are crucial.

Training Instability:

- Training GANs can be unstable and difficult to converge. The adversarial training process, involving both a generator and a discriminator, requires careful balancing, which is more challenging in the context of language modeling.

Discrete Nature of Language:

- Language is discrete, whereas GANs are naturally suited for continuous data like images. The discrete nature of language makes it harder to apply gradient-based optimization methods directly, which are essential for GAN training.

Evaluation Challenges:

- Evaluating the quality of generated text is inherently more subjective and challenging compared to images. Objective metrics for language generation (like BLEU, ROUGE) often do not correlate well with human judgment, making it harder to train and evaluate GANs effectively.

Large-Scale Data Requirements:

- LLMs like Transformers are pre-trained on massive amounts of text data using self-supervised learning objectives (e.g., next token prediction, masked language modeling). This approach is straightforward and scales well with large datasets, whereas GANs would require a different setup, potentially increasing complexity.

Pre-training and Fine-tuning Paradigm:

- The Transformer architecture, particularly in models like GPT and BERT, leverages a pre-training and fine-tuning paradigm that has proven to be extremely effective for a wide range of NLP tasks. This approach benefits from transfer learning, where the model can be fine-tuned on specific tasks after being pre-trained on a large corpus.

Success of Transformer-Based Architectures:

- The success of Transformer-based architectures in achieving state-of-the-art performance on numerous NLP benchmarks has set a high standard. These models have shown remarkable capability in capturing complex language patterns and dependencies, making alternative approaches like GANs less attractive.

Complexity of Language Generation Tasks:

- Language generation tasks require maintaining coherence, context, grammar, and meaning over longer sequences, which is challenging for GANs. Transformers, with their attention mechanisms, handle these requirements more effectively.

Lack of Need for Adversarial Framework:

- The adversarial framework of GANs, where a generator tries to fool a discriminator, is not inherently necessary for text generation. Other approaches, like autoregressive models (GPT) or denoising autoencoders (BERT), have proven to be more straightforward and effective for language tasks.

Given these reasons, the research and development community has predominantly focused on Transformer-based architectures for large language models, which have demonstrated superior performance and scalability for a wide range of natural language processing tasks.

If encoder is for understanding and decoder for generation then how come GPT (decoder only architecture), understands context?#

The distinction between encoder and decoder roles in Transformers is somewhat generalized. While it’s true that encoder layers are primarily designed for understanding and decoder layers for generation, the capabilities of a model like ChatGPT (which uses a decoder-only architecture) to understand and generate coherent responses come from the way these models are trained and designed.

Here’s how ChatGPT, despite being a decoder-only model, can effectively understand and respond to input:

Training Methodology#

Autoregressive Training:

- ChatGPT and similar models are trained using an autoregressive approach, where the model learns to predict the next token in a sequence given all the previous tokens. This means that during training, the model is exposed to vast amounts of text and learns to generate contextually relevant tokens based on the preceding context.

Self-Attention Mechanism:

- The self-attention mechanism allows the model to consider the entire context of the input sequence when generating each token. This enables the model to weigh the importance of different tokens and capture long-range dependencies in the text.

Context Window:

- During inference, the model takes a prompt or an input document and generates a response based on the context provided by the input tokens. The self-attention mechanism ensures that the model can “understand” the input by attending to relevant parts of the context while generating each token.

Understanding in Decoder-Only Models#

Contextual Embeddings:

- When the model generates a token, it relies on contextual embeddings that represent the entire input sequence up to that point. These embeddings capture the meaning and nuances of the input, enabling the model to generate contextually appropriate responses.

Implicit Understanding:

- Although the model doesn’t have a separate encoder for explicit understanding, the layers of the decoder effectively perform this role implicitly. The multi-layered architecture allows the model to build a deep representation of the input sequence, which includes understanding syntax, semantics, and context.

Pre-training on Diverse Data:

- Models like ChatGPT are pre-trained on diverse datasets that include a wide range of language patterns, topics, and contexts. This extensive pre-training allows the model to generalize well and understand various inputs during inference.

Fine-Tuning#

- Instruction Following:

- Fine-tuning on instruction-following datasets helps models like ChatGPT improve their ability to respond accurately to user prompts. This fine-tuning involves training the model on examples where it is required to follow specific instructions or respond to questions based on the provided context.

- Reinforcement Learning with Human Feedback (RLHF):

- Methods like RLHF further refine the model’s responses to align with human preferences, ensuring that the generated text is coherent, relevant, and contextually appropriate.

Practical Example#

- When given an input document, ChatGPT processes the entire input sequence through its self-attention mechanism, allowing it to understand the context and generate a response that is coherent and relevant. This process is similar to how a human might read and comprehend a passage before responding to questions about it.

In summary, while encoder layers are traditionally associated with understanding and decoder layers with generation, the self-attention mechanism and the autoregressive training of models like ChatGPT enable them to effectively understand and generate text, making them versatile in both roles.

Author

Dr Hari Thapliyaal

dasarpai.com

linkedin.com/in/harithapliyal

Comments: