Demystifying DevOps, MLOps, and DataOps:#

Bridging the Gap between Software Development, Machine Learning, and Data Managemen

Introduction#

What is DevOps#

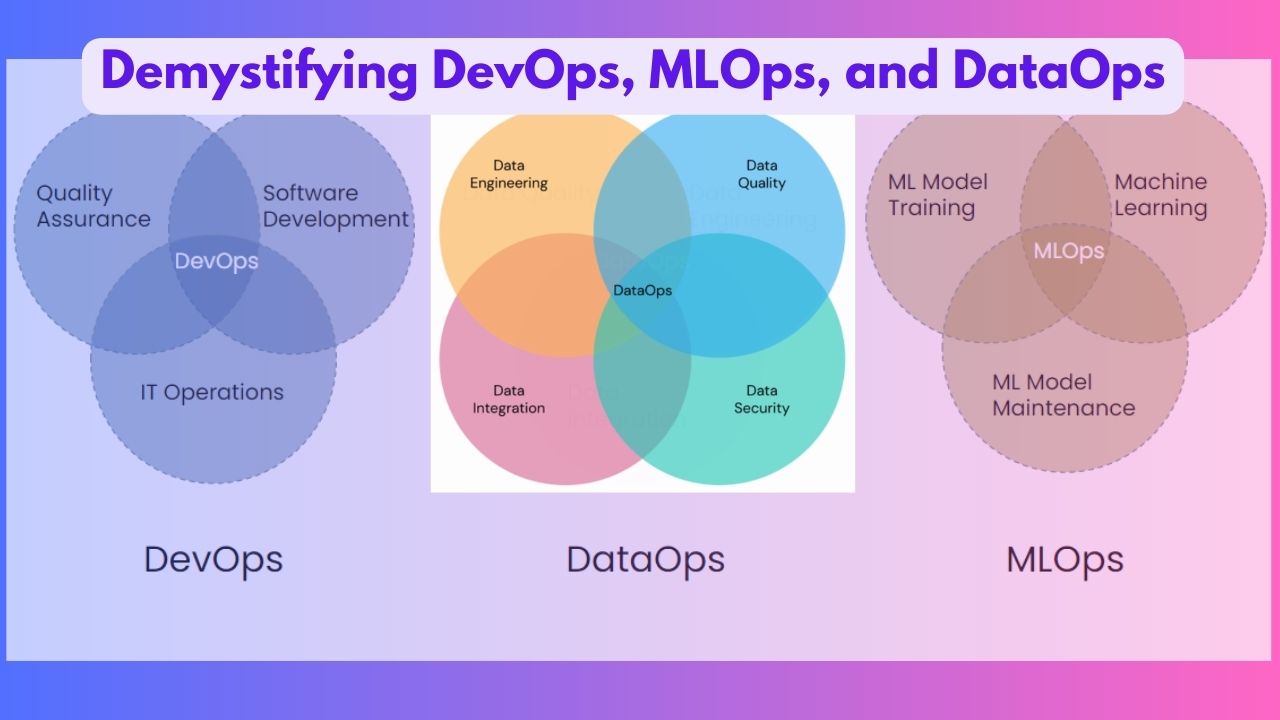

DevOps, short for Development and Operations, is a set of practices, principles, and cultural philosophies that aim to improve collaboration and efficiency between software development teams and IT operations teams. It emphasizes the integration of software development and IT operations, breaking down traditional silos and fostering a collaborative approach throughout the entire software delivery lifecycle.

The main goal of DevOps is to enable organizations to deliver high-quality software products more rapidly, reliably, and frequently. It achieves this by promoting automation, continuous integration and delivery (CI/CD), close collaboration and communication, and a focus on measuring and improving key performance indicators (KPIs).

Key principles and practices associated with DevOps are Collaboration, Version Control, Automation, CI/CD, IaC, Monitoring and Logging, and Agile & Lean Practices.

What is MLOps#

MLOps, short for Machine Learning Operations, is a set of practices, processes, and technologies that aim to operationalize and manage machine learning models in production environments. It combines principles from machine learning, data engineering, and DevOps to ensure the reliable deployment, monitoring, and maintenance of machine learning systems.

MLOps addresses the challenges that arise when transitioning from developing and training machine learning models in isolated environments to deploying and maintaining them at scale in production. It focuses on automating and streamlining tasks related to data preparation, model training, model deployment, monitoring, and retraining.

Key principles and practices associated with MLOps are Collaboration, Version Control, CI/CD, Infrastructure as Code (IaC), Model Monitoring, Experiment Tracking, Model Deployment and Serving, Governance and Compliance.

What is DataOps#

DataOps, short for Data Operations, is a set of practices, processes, and technologies that focus on the integration, management, and delivery of data in an organization. It is an emerging discipline that combines principles from data management, data integration, and DevOps to ensure efficient and reliable data operations throughout the data lifecycle.

DataOps aims to address the challenges associated with managing and operationalizing data in modern data-driven organizations. It emphasizes collaboration, automation, and agility in handling data, enabling organizations to make informed decisions, drive innovation, and deliver value from their data assets.

Key principles and practices associated with MLOps are Data Integration and Pipelines, Data Quality and Governance, Data Versioning and Lineage, Data Catalog and Metadata Management, Self-Service Data Acces, Data Security and Privacy, Collaboration and Communication.

Summary of DevOps, MLOps and DataOps: Comparision#

| Aspect | DevOps | MLOps | DataOps |

|---|---|---|---|

| Use Cases | Software development, application deployment | Machine learning model deployment and monitoring | Data integration, analytics, data-driven decision |

| Benefits | Faster software development and deployment | Efficient model deployment, monitoring, scaling | Improved data quality, governance, efficiency |

| Challenges | Balancing speed and stability, cultural adoption | Model reproducibility, scalability, model drift | Data quality, data governance, data integration |

| Focus | Software development and operations | Machine learning operations and deployment | Data operations and management |

| Objective | Streamlining collaboration and delivery | Efficient and scalable ML model deployment | Effective data integration and management |

| Purpose | Automating software development and deployment | Automating ML model development and deployment | Automating data operations and workflows |

| Key Components | Continuous integration, delivery, and deployment | Model versioning, monitoring, and lifecycle | Data governance, quality, and integration |

| Tools | Version control, CI/CD tools, configuration mgmt. | ML frameworks, model registries, orchestration | Data integration, data quality, workflow tools |

Various Aspects of DevOps, MLOps and DataOps#

A summary of various activities and software used to manage various aspects of DevOps, MLOps and DataOps

Version Control:#

DevOps: Manages the versioning and history of source code and configuration files, enabling collaboration, change tracking, and easy rollbacks.

MLOps: Tracks versions of machine learning models, scripts, and data artifacts, ensuring reproducibility and traceability of experiments.

DataOps: Tracks versions of data pipelines, data transformations, and data sets, facilitating data lineage and reproducibility.

Softwares : Git, SVN, Mercurial, Perforce

Continuous Integration:#

DevOps: Automates the process of integrating code changes from multiple developers, enabling early detection of integration issues.

MLOps: Integrates code changes, data preprocessing, and model training in a consistent and automated manner, ensuring the quality of ML workflows.

DataOps: Integrates data ingestion, processing, and validation steps, promoting a continuous flow of high-quality data.

Softwares : Jenkins, Travis CI, CircleCI, Bamboo, GitLab CI

Configuration Management:#

DevOps: Manages and automates the configuration of software systems, including infrastructure settings, environment variables, and application settings.

MLOps: Tracks and manages the configuration of ML pipelines, including data sources, preprocessing steps, hyperparameters, and model settings.

DataOps: Manages the configuration of data workflows, including data sources, transformations, schema mappings, and data quality checks.

Softwares : Ansible, Chef, Puppet, SaltStack

Containerization:#

DevOps: Uses containerization platforms like Docker to package applications and their dependencies, ensuring consistent deployment across different environments.

MLOps: Containerizes ML models and their dependencies, enabling portability, reproducibility, and scalability of model deployments.

DataOps: Containerizes data processing and analytics tools, facilitating reproducible data workflows and easier deployment of data services.

Softwares : Docker, Kubernetes, OpenShift, AWS ECS, Google Kubernetes Engine

Monitoring/Logging:#

DevOps: Monitors the health, performance, and availability of applications and infrastructure, and logs important events and metrics for troubleshooting and analysis.

MLOps: Monitors the performance and behavior of deployed ML models, capturing metrics and logs for model evaluation, drift detection, and debugging.

DataOps: Monitors data pipelines and data services, ensuring data quality, detecting anomalies, and providing visibility into the data processing workflow.

Softwares : Prometheus, Grafana, ELK Stack (Elasticsearch, Logstash, Kibana)

Model Serving:#

DevOps: Facilitates the deployment and scaling of application services to serve end-users.

MLOps: Enables the deployment, scaling, and management of machine learning models in production, exposing them as APIs for predictions or inference.

DataOps: Supports the deployment and availability of data services, including data access, data transformation, and data provisioning APIs.

Softwares : TensorFlow Serving, TensorFlow Extended (TFX), Seldon Core

Data Integration:#

DevOps: Integrates data from various sources and systems, ensuring data consistency and availability across different applications and environments.

MLOps: Integrates data sources, preprocessing steps, and model training pipelines to create end-to-end ML workflows.

DataOps: Integrates and consolidates data from different sources and systems, enabling data transformations, enrichment, and data delivery.

Softwares : Apache Kafka, Apache NiFi, Apache Airflow, AWS Glue

Continuous Deployment:#

DevOps: Automates the deployment of applications, infrastructure, and configuration changes across various environments or stages.

MLOps: Automates the deployment of ML models and associated infrastructure, enabling seamless and reproducible model deployments.

DataOps: Automates the deployment of data pipelines, data transformations, and data services, ensuring consistent and reliable data delivery.

Softwares : AWS CloudFormation, Terraform, Kubernetes, Ansible

DevOps: Ensuring that software is always in a releasable state by automating the entire software delivery process.

ML Frameworks:#

DevOps: Provides tools and libraries for building and deploying applications, but is not typically focused on specific ML frameworks.

MLOps: Utilizes ML frameworks such as TensorFlow, PyTorch, or scikit-learn to develop and train machine learning models. MLOps frameworks like Kubeflow or MLflow provide additional capabilities for managing the ML lifecycle.

DataOps: May not directly deal with ML frameworks, but can collaborate with MLOps to ensure smooth integration of data pipelines with ML frameworks and platforms.

Softwares : TensorFlow, PyTorch, scikit-learn, MLflow, Kubeflow

Data Governance:#

DevOps: May not directly handle data governance, but can collaborate with DataOps to ensure proper management, security, and compliance of data in the software delivery process.

MLOps: Incorporates data governance practices to ensure proper data handling, privacy, security, and compliance throughout the ML workflow, especially when dealing with sensitive or regulated data.

DataOps: Enforces data governance policies and practices, including data quality, privacy, security, metadata management, and compliance with regulations like GDPR or CCPA.

Softwares : Apache Atlas, Collibra, Alation, Talend Data Catalog

Workflow Orchestration:#

DevOps: Orchestrates and automates the deployment and management of applications, infrastructure, and related processes across various stages and environments.

MLOps: Orchestrates the end-to-end ML workflow, including data ingestion, preprocessing, model training, evaluation, deployment, monitoring, and retraining.

DataOps: Orchestrates data workflows, including data ingestion, transformations, validations, enrichment, and delivery, ensuring smooth and reliable data operations.

Softwares : Apache Airflow, Luigi, Apache Oozie

Infrastructure as Code (IaC):#

DevOps: Tools and practices for managing infrastructure through code, such as Terraform or CloudFormation.

Incident Management:#

DevOps: Practices and tools for effectively managing and resolving incidents in production systems.

Experiment Tracking:#

MLOps: Capturing and organizing metadata related to machine learning experiments, including hyperparameters, metrics, and results.

Model Monitoring:#

MLOps: Continuously monitoring deployed machine learning models to detect performance degradation or drift.

Data Quality Management#

DataOps: Ensuring the quality and integrity of data throughout its lifecycle, including validation, cleansing, and anomaly detection.

Data Cataloging:#

DataOps: Creating a centralized catalog of data assets, including metadata, data lineage, and data discovery capabilities.

Data Privacy and Security:#

DataOps: Implementing measures to protect sensitive data and comply with privacy regulations.

Conclusion#

In conclusion, the fields of DevOps, MLOps, and DataOps have emerged as crucial disciplines in today’s technology landscape, addressing the challenges of rapid software development, machine learning model deployment, and effective data management.

DevOps promotes collaboration, automation, and continuous delivery to streamline software development and operations, enabling organizations to deliver high-quality software products more rapidly and reliably. It breaks down silos between development and operations teams, fostering a culture of shared responsibility and continuous improvement.

MLOps combines machine learning, data engineering, and DevOps principles to develop ML models, operationalize and manage machine learning models in production environments. By automating tasks like model training, deployment, and monitoring, MLOps ensures the reliability and scalability of machine learning systems, facilitating their seamless integration into the software delivery pipeline.

DataOps focuses on the integration, management, and delivery of data in organizations. It emphasizes collaboration, automation, and data quality to ensure efficient data operations throughout the data lifecycle. By implementing DataOps practices, organizations can derive insights from data, make informed decisions, and drive innovation.

While each discipline has its unique focus, they all share common principles such as version control, automation, collaboration, and continuous improvement. Together, DevOps, MLOps, and DataOps empower organizations to accelerate software development, deploy machine learning models at scale, and effectively manage and leverage their data assets.

By adopting these practices, organizations can overcome the challenges associated with complex software systems, machine learning deployments, and data management, ultimately enabling them to stay competitive in today’s data-driven and technology-centric landscape. Embracing the principles of DevOps, MLOps, and DataOps is essential for organizations seeking to achieve efficiency, agility, and value in their software, machine learning, and data operations.

Comments: